Watch: Streaming Chat GPT with Rails + Hotwire

In the previous video, we set up a chat service to access ChatGPT and return responses. Now, we're taking it to the next level by enabling streaming responses. This way, as soon as ChatGPT starts responding, you’ll see the messages stream in live.

I've set up a basic UI with a form where you can post your questions. Currently, it doesn't keep any chat context or history - you just ask a question and get an answer.

Let’s dive into building it!

Setting Up Models

The first thing we'll need to do is generate some models to handle conversations and messages.

rails generate model Conversation user:references

rails generate model Message conversation:references role:text content:text

Now, let's run the migrations:

rails db:migrate

Defining Relationships

We'll need to set up associations between our models.

The Conversation model should look like this:

class Conversation < ApplicationRecord
  belongs_to :user
  has_many :messages, dependent: :destroy
end

And the Message model:

class Message < ApplicationRecord
  belongs_to :conversation
end

Creating Conversations

Next, we need to ensure that a conversation is created when a user visits the home page. We’ll do this in the PagesController.

pages_controller.rb:

class PagesController < ApplicationController
  skip_before_action :authenticate

  def home
    # Reuse the user's first conversation, or create one if none exists yet.
    @conversation = Current.user.conversations.first_or_create!
  end
end

Also, in the User Model:

class User < ApplicationRecord
  # ...
  has_many :conversations, dependent: :destroy
  # ...
end

Adjusting the Form

We need to pass the conversation_id through the form. Here’s how we can set a hidden field in our form.

home.html.erb:

<%= form_with url: chats_path, local: true do |form| %>
  <%= hidden_field_tag :conversation_id, @conversation.id %>
  <%= form.text_field :content %>
  <%= form.submit "Post" %>
<% end %>

If we inspect the form, we should see the conversation ID included as a hidden field:

Handling Messages

Now, we'll update the chat controller to permit the conversation_id and create new messages.

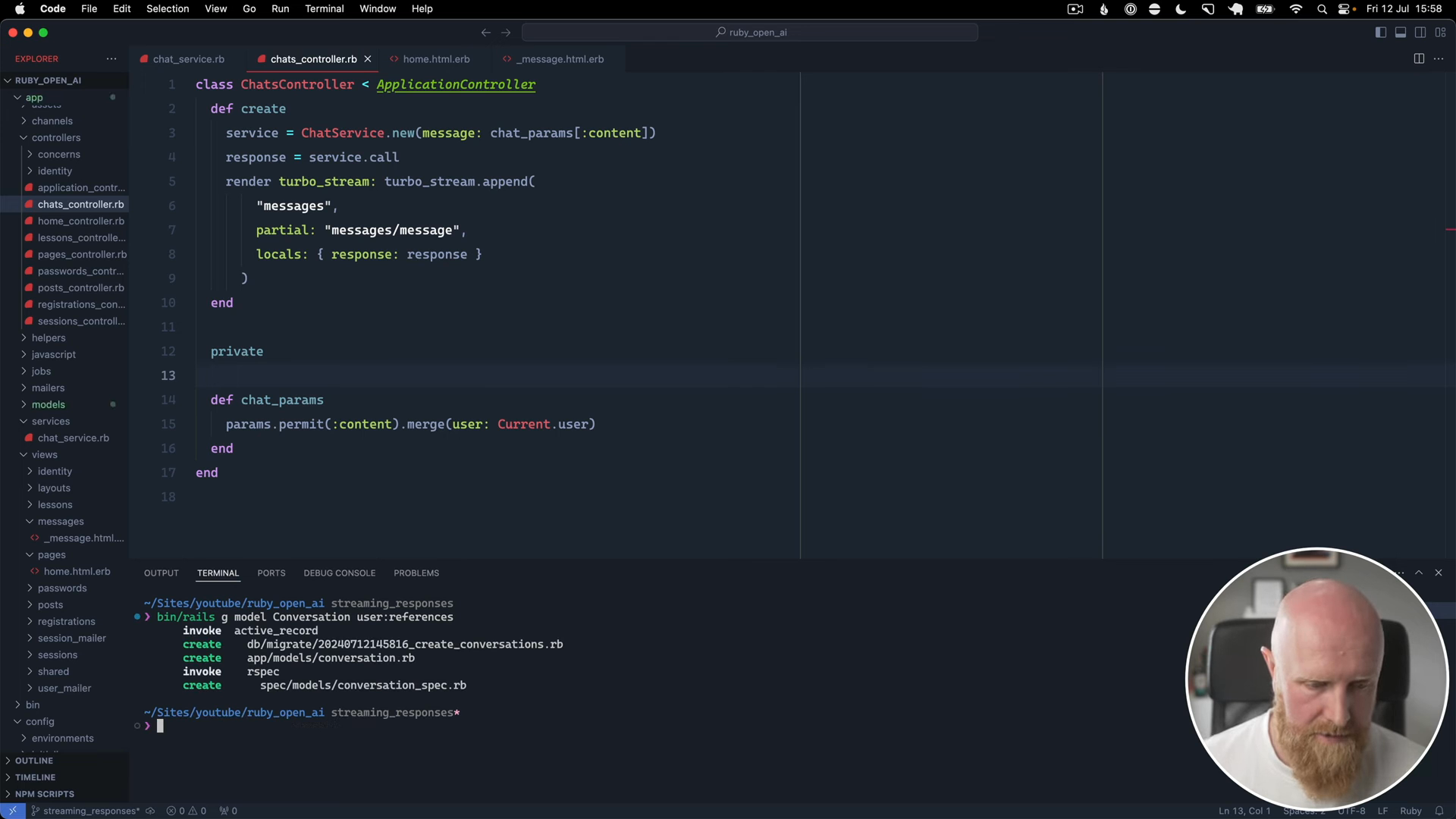

chats_controller.rb:

class ChatsController < ApplicationController
  def create
    # Look the conversation up through the current user so it must belong to them.
    conversation = Current.user.conversations.find(chat_params[:conversation_id])

    # Store the user's question before asking ChatGPT for a reply.
    message = conversation.messages.create!(
      role: "user",
      content: chat_params[:content]
    )

    ChatService.new(conversation: conversation, message: message).call

    head :no_content
  end

  private

  def chat_params
    params.permit(:content, :conversation_id).merge(user: Current.user)
  end
end

Chat Service Refinement

We'll adjust our ChatService so that it builds the request from the conversation's stored messages and saves the reply as a new message.

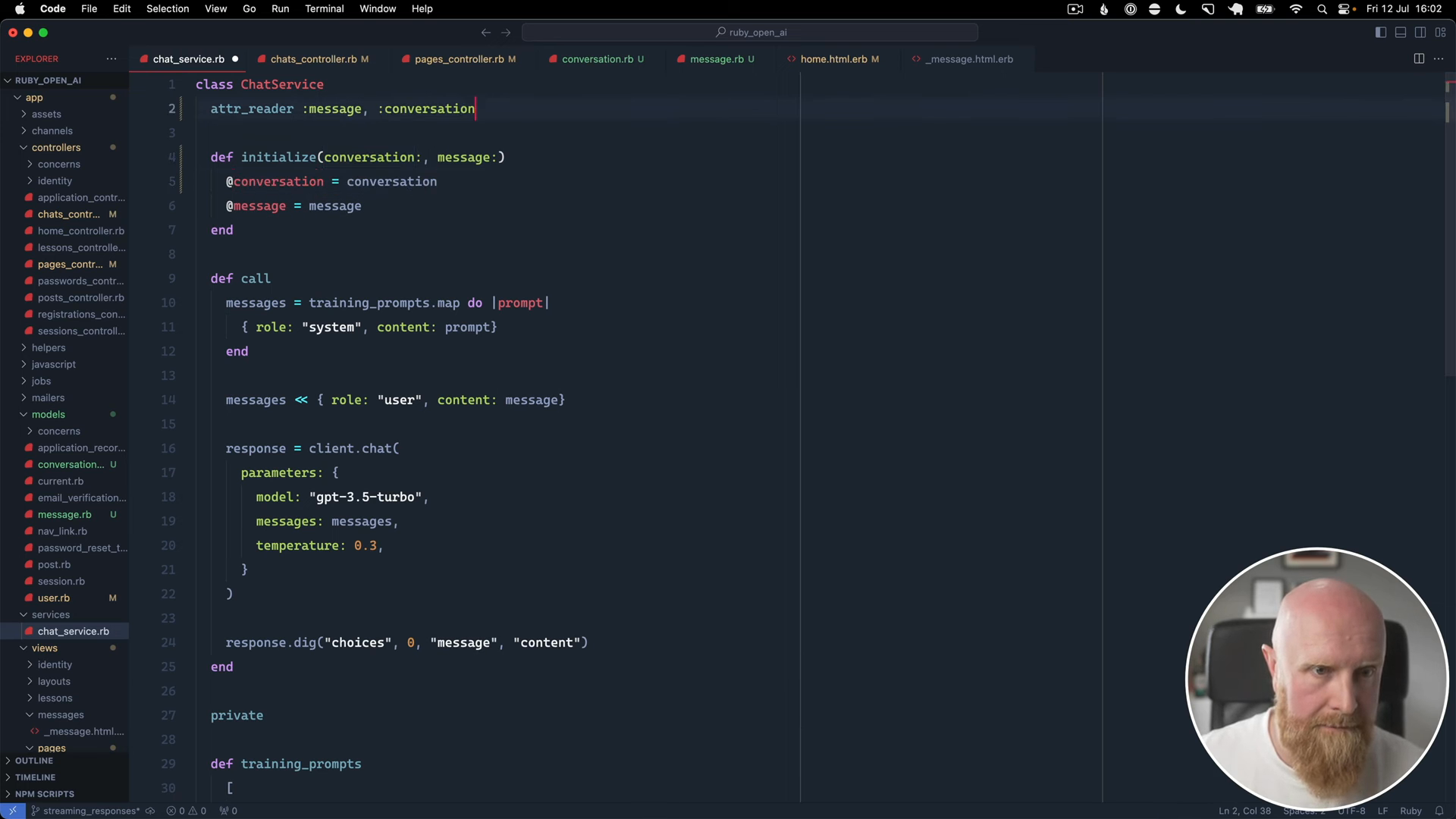

chat_service.rb:

class ChatService
  attr_reader :message, :conversation

  def initialize(conversation:, message:)
    @conversation = conversation
    @message = message
  end

  def call
    # Start with the system prompts, then append the conversation history.
    messages = training_prompts.map do |prompt|
      { role: "system", content: prompt }
    end

    conversation.messages.each do |msg|
      messages << { role: msg.role, content: msg.content }
    end

    response = client.chat(
      parameters: {
        model: "gpt-3.5-turbo",
        messages: messages,
        temperature: 0.3
      }
    )

    # Save the reply so it becomes part of the conversation history.
    conversation.messages.create!(
      role: "assistant",
      content: response.dig("choices", 0, "message", "content")
    )

    true
  end

  # ...
end
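
To make the shape of the request easier to see, here is a minimal sketch of how the messages array is assembled, with no Rails or API client involved. The names history_message and build_messages and the sample prompt are hypothetical stand-ins for the Message records and the elided training_prompts method; they are not part of the original code.

```ruby
# Hypothetical stand-in for a Message record; only role and content matter here.
history_message = Struct.new(:role, :content)

# Builds the array passed as the `messages` parameter: system prompts first,
# followed by the conversation history in the order given.
def build_messages(training_prompts, history)
  system_messages = training_prompts.map do |prompt|
    { role: "system", content: prompt }
  end

  history_messages = history.map do |msg|
    { role: msg.role, content: msg.content }
  end

  system_messages + history_messages
end

# Sample data, made up for illustration.
history = [
  history_message.new("user", "What is Hotwire?"),
  history_message.new("assistant", "A way to build web apps with little JavaScript."),
  history_message.new("user", "Does it work with Rails?")
]

payload = build_messages(["You are a helpful assistant."], history)
payload.each { |entry| puts "#{entry[:role]}: #{entry[:content]}" }
```

Because the whole history is sent on every request, the model can answer follow-up questions in context, which is what the single question-and-answer form was missing.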

Turbo Streams for Real-time Updates

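We need to set up Turbo Streams to broadcast messages in real time. For the broadcasts below to show up, the page also has to subscribe to the conversation's stream (for example with turbo_stream_from @conversation) and contain an element with the id messages for new messages to be appended to.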

Update message.rb:

class Message < ApplicationRecord
  belongs_to :conversation

  after_create_commit -> { broadcast_created }
  after_update_commit -> { broadcast_updated }

  # Append the new message to the element with the id "messages".
  def broadcast_created
    broadcast_append_to(
      conversation,
      partial: "messages/message",
      locals: { message: self },
      target: "messages"
    )
  end

  # Replace the already-rendered message, matched by its element id.
  def broadcast_updated
    broadcast_replace_to(
      conversation,
      partial: "messages/message",
      locals: { message: self },
      target: "message_#{id}"
    )
  end
end

And our message partial _message.html.erb:

<div id="message_<%= message.id %>" class="py-5 px-6 rounded-md mt-4 mr-10 bg-gray-100 text-gray-700">
  <%= message.content %>
</div>

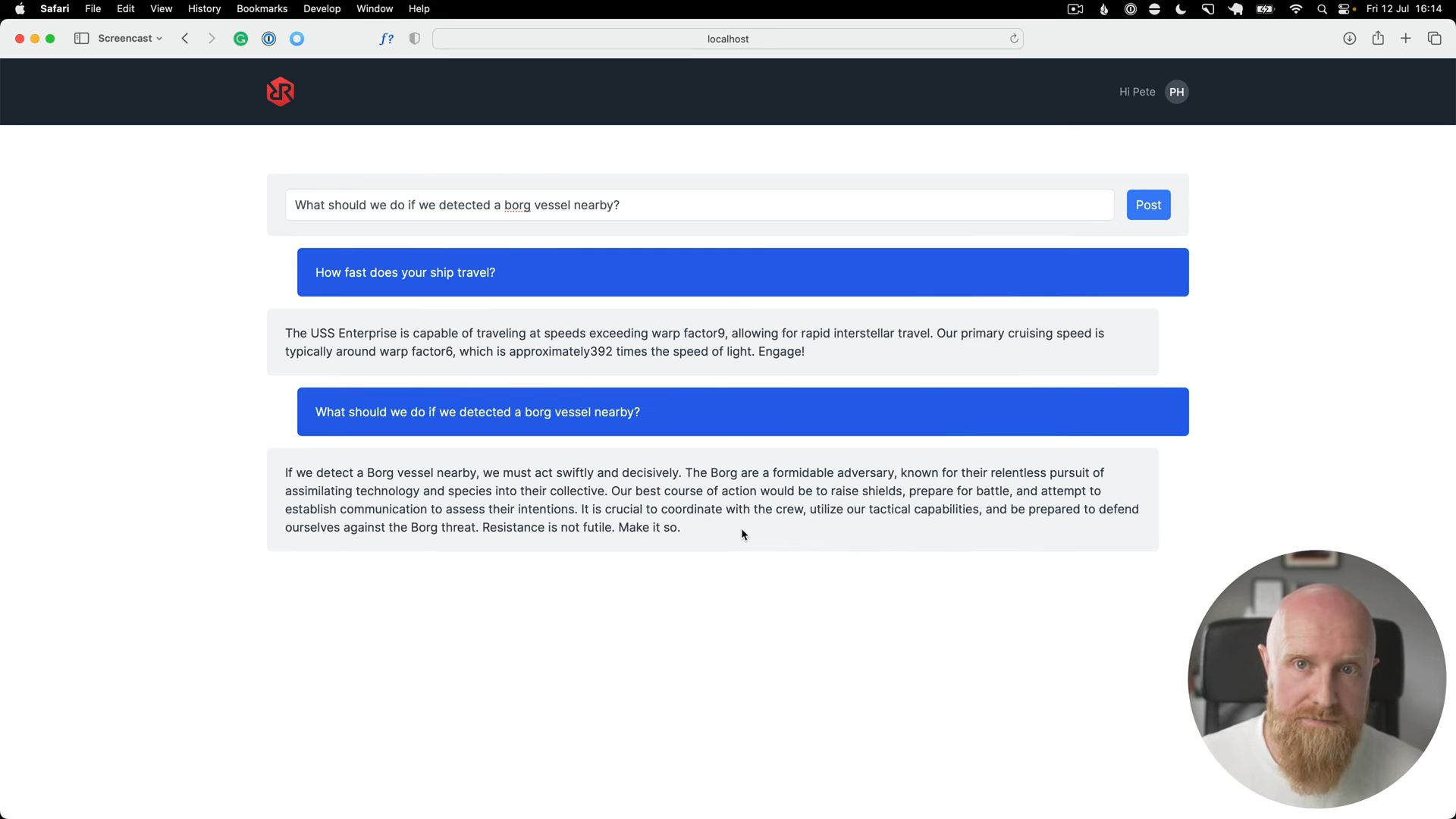

Styling Messages

To differentiate between user and assistant messages, we’ll add some basic styles:

_message.html.erb:

<div id="message_<%= message.id %>" class="py-5 px-6 rounded-md mt-4 <%= message.role == "user" ? "ml-10 bg-blue-600 text-white" : "mr-10 bg-gray-100 text-gray-700" %>">
  <%= message.content %>
</div>
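
If the ternary inside the class attribute gets hard to read, one option is to move the class selection into a helper. The sketch below is plain Ruby. The name message_classes is hypothetical and not from the original code; it reuses the same Tailwind classes as the partial above.

```ruby
# Hypothetical helper: returns the CSS classes for a message bubble.
# User messages sit on the right in blue; everything else sits on the left in gray.
def message_classes(role)
  base_classes = "py-5 px-6 rounded-md mt-4"

  role_classes =
    if role == "user"
      "ml-10 bg-blue-600 text-white"
    else
      "mr-10 bg-gray-100 text-gray-700"
    end

  "#{base_classes} #{role_classes}"
end

puts message_classes("user")
puts message_classes("assistant")
```

In a Rails app, a method like this would typically live in a helper module, and the partial would call it with message.role.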

Streaming Setup

Finally, we'll set up streaming in our ChatService:

chat_service.rb (continued):

class ChatService
  attr_reader :message, :conversation

  def initialize(conversation:, message:)
    @conversation = conversation
    @message = message
  end

  def call
    messages = training_prompts.map do |prompt|
      { role: "system", content: prompt }
    end

    conversation.messages.each do |msg|
      messages << { role: msg.role, content: msg.content }
    end

    # Create an empty assistant message up front; it is filled in as chunks arrive.
    new_message = conversation.messages.create!(
      role: "assistant",
      content: ""
    )

    client.chat(
      parameters: {
        model: "gpt-3.5-turbo",
        messages: messages,
        temperature: 0.3,
        # Called once per streamed chunk; each save triggers the update broadcast.
        stream: proc do |chunk, _bytesize|
          text = chunk.dig("choices", 0, "delta", "content")

          if text.present?
            new_message.content += text
            new_message.save
          end
        end
      }
    )

    true
  end

  # ...
end

Now, if we reload the page and ask a question, the responses will start streaming in!
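
To see what the stream proc does in isolation, the sketch below feeds it a few hand-written chunks instead of calling the API. The chunk hashes are an assumption about the shape the client yields (text under choices, 0, delta, content, with the first and last chunks carrying no text). The fake message object is a hypothetical stand-in for the Message record that only counts saves.

```ruby
# Hypothetical stand-in for the assistant Message record: it holds the content
# and counts how many times save is called.
fake_message_class = Struct.new(:content, :save_count) do
  def save
    self.save_count += 1
    true
  end
end

new_message = fake_message_class.new("", 0)

# Same logic as the proc passed to `stream:` in the service.
stream_handler = proc do |chunk, _bytesize|
  text = chunk.dig("choices", 0, "delta", "content")

  # `present?` comes from Rails; plain Ruby needs an explicit nil/empty check.
  unless text.nil? || text.empty?
    new_message.content += text
    new_message.save
  end
end

# Assumed chunk shapes, written by hand for illustration.
chunks = [
  { "choices" => [{ "delta" => { "role" => "assistant", "content" => "" } }] },
  { "choices" => [{ "delta" => { "content" => "Hello" } }] },
  { "choices" => [{ "delta" => { "content" => ", world" } }] },
  { "choices" => [{ "delta" => {}, "finish_reason" => "stop" }] }
]

chunks.each { |chunk| stream_handler.call(chunk, 0) }

puts new_message.content
puts new_message.save_count
```

Only the chunks that carry text lead to a save. In the real service each of those saves fires the after_update_commit callback, so the browser receives one replace broadcast per non-empty chunk.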

Conclusion

With Turbo Streams and Rails, integrating a streaming chat feature using ChatGPT is straightforward. The interface feels much snappier when text streams in as it is generated than when you have to wait for the entire response.

I hope you found this tutorial useful! If you want to see more about integrating ChatGPT with your Rails app, let me know in the comments.

Stay tuned for more!